“With enough eyes, all bugs are shallow”

Linus’s Law

“With enough observability, all outages are transient”

Popoola’s law

Introduction

A team without proven observability and on-call strategies will invariably suffer from reactive disruptions; mitigating outages will be painful, like finding a needle in a haystack while blindfolded. I know because I have stabilized teams going through chaotic times:

- Undetected degradations causing customer pain

- Never-ending tsunamis of noisy alerts

- Unsustainable and unbearable round-the-clock on-call pressure

This post is for managers grasping at straws because their team is constantly fighting fires, and burnt-out engineers are quitting. It is also helpful for leaders who desire to add a proven technique to their toolbox. Who does not like a high-performing team that purrs like a well-oiled machine?

The four Ds of perpetually-reactive teams

- Disconnect: There is a chasm between the organizational perception of customer experience and the actual customer experience. Some classic symptoms of the disconnect between perception and reality include:

- A constant stream of customer complaints despite monitoring systems reporting a ‘healthy’ state.

- A lack of proactive failure detection; outages are only detected when customers complain.

- Engineers struggling to articulate the customer impact of pages.

- An engineer accidentally discovering broken features.

- Distrust: A big red flag is a lack of confidence in triggered alerts. The more false alarms a monitoring system throws, the lower the conviction engineers have in the system. Unfortunately, this low signal-to-noise state accelerates the disrepair cycle; engineers jaded by monitors constantly crying wolf stop paying attention. At this stage, you should grab some popcorn and wait for the inevitable massive outage.

- Disorganization: There are no prescribed best practices, and the ‘recommended approach’ depends on whom you engage. This lack of organized, clear guidance manifests in a proliferation of monitoring frameworks, a dearth of battle-tested tooling, and ad-hoc outage remediation practices. Invariably, engineers resort to hit-and-miss solutions like restart-and-pray-it-goes away (e.g., restart the PC, and the ‘issue’ should go away).

- Disrepair: Tools, systems, and alerts have fallen into disrepair. The reasons vary from services being in maintenance mode, lack of know-how due to attrition, and half-dead/half-alive projects.

Don’t miss the next post!

Subscribe to get regular posts on leadership methodologies for high-impact outcomes.

How monitoring strategies fail customers

Monitoring aims to ensure a great customer experience by proactively nipping issues in the bud or quickly mitigating uncaught issues. Most methods miss the mark – not due to tooling gaps but rather due to a misapplication of the tool, which signifies a misunderstanding of the core problem.

A good symptom of this issue is that the number of teams stuck in firefighting matches the number of observability tools; indeed, if it was purely a tooling issue, adopting Prometheus/Nagios/geneva/kusto/xyz should solve the problem.

What your customers want

While using a word processor, I want to write and get my work done; I don’t care about memory usage or processor speed. Thus, the occasional freeze or crash is tolerable – I grumble, restart the app, and resume work. However, I become frustrated if I lose my work or if the symptoms persist after a restart, refresh, or reboot.

Customers only care about glitches once they cause non-reversible damage. The occasional crash, YouTube glitch, or PC freeze is bearable because it is transient.

Making your customers happy

An observability strategy must answer the critical question: are your customers happy? Answering the question requires knowing your customers and knowing what makes them happy. A plan that answers this question will permeate the observability stack and influence coherent operational practices.

Customer happiness examples

- Product teams: performance, reliability, durability. See no surprises post for more.

- Platform teams: Don’t stop at the immediate groups consuming your services; try to learn about the customers of those partner teams.

Some example proxy metrics for customer dissatisfaction

- Reliability: Failures and unreliable outcomes due to errors in internal systems (e.g., error dialogs).

- Latency: Operations taking longer than expected (e.g., a request taking 10 seconds instead of 2 seconds).

- Usability: Internal errors that should never be shown to customers (e.g., cryptic generic messages or user-unfriendly debug logs).

- Durability: Data loss in mission-critical systems (e.g., failing to save).

- Availability: Systems are unavailable when needed to handle requests (e.g., the server cannot be reached).

Why you need a good observability metric

Accurate customer-focused observability metrics serve two purposes:

- North-star: They provide an aiming beacon for improved customer service – they help prioritize, track repair efforts, and focus on the highest-leverage interventions. They can also be integrated into organizational practices (e.g., service reviews, postmortems, etc.) to ensure that subtle deviations do not slip under the radar (e.g., a 0.05% drop in health trends over several weeks)

- Proactive alerting: They are highly accurate canaries that provide early warnings of regressions. Any sudden and sustained drop in the health metric directly correlates to true customer impact. Setting up alerts on these metrics will close production observability gaps.

Now let’s discuss a battle-tested and proven strategy for overcoming this.

The three entities of the CAR framework: Customers, Applications, and Resources

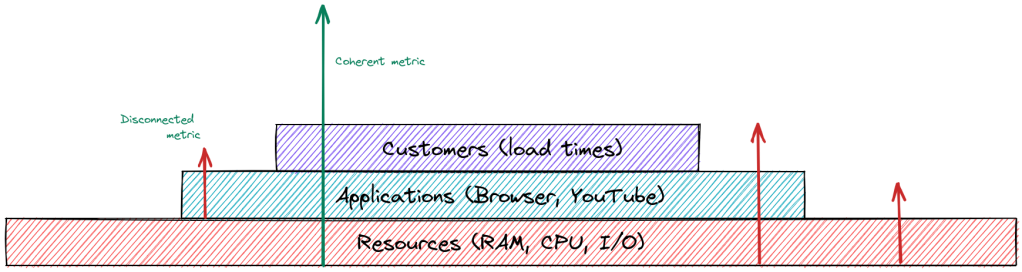

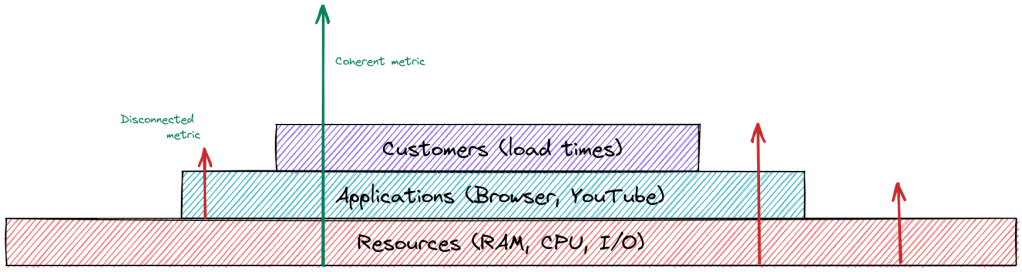

CAR stands for Customers, Applications, and Resources; it offers a solution to the monitoring disconnect by establishing the interactions between the three entities: the user, the application, and the underlying resources.

It ensures overlapping monitoring coverage like the test pyramid ensures test coverage.

- The user: wants to get something done (write a doc, watch YouTube). Satisfaction depends on the application working as expected.

- The application: used to solve a problem; an application might crash or have bugs; also, a perfect application will stumble if starved of resources.

- The resources: Provide suitable hosts for applications e.g., CPU, memory, and I/O; these are needed for applications to run smoothly.

Resources (e.g., virtual machines, caches) form the foundation on which applications are built; in turn, applications are made to address consumer wants.

Using the CAR to highlight the disconnects

Disconnects arise because most monitoring approaches focus on application and resource health to the detriment of customer-facing observability measures.

Most strategies assume that healthy applications and resources guarantee an excellent customer experience; this assumption is not always true. The red arrows in the diagram below show how focusing on a single layer can lead to noisy monitors. The single green line is a way to thread through observability and tie it to customers – a customer-centric measure is the linchpin of successful monitoring strategies.

Outcomes of using CAR

Here are some results of applying this strategy across teams:

- Identification of blind spots: Detecting outages that would have gone unnoticed before. Exposure of long-hidden and long-standing flaws in the system, which in turn enables proper architectural fixes.

- Toil reduction: Orders-of-magnitude drop in incident volumes (primarily due to the elimination of noisy monitors).

- Trust: Alerts signified genuine customer issues, and engineers are motivated to identify the root causes. This was better than superficial treatment for noisy monitors: muting the alarms or restarting machines.

- Proactive execution: the toil reduction from the reduced incident volumes and exposure of architectural flaws helps the team to pivot from reactive firefighting to proactive, focused execution.

Everyone was happier: customers got fewer outages, and engineers got fewer calls.

Conclusion

Most typical monitoring strategies miss the forest for the trees – they zero in on resource or application health and miss out on the most critical question – is the customer satisfied?

If you take only one thing out of this post – it is to ensure your monitoring strategy is tied directly to customer satisfaction – 10 nines do not matter if your users cannot use your app. Are your customers happy?

Upcoming posts in this series will dive into how to roll out CAR across teams.

Don’t miss the next post!

Subscribe to get regular posts on leadership methodologies for high-impact outcomes.